Using Experiments to Improve Agents

Learn how to use experiments to compare different agent configurations, test variations, and optimize for cost, speed, and accuracy

Experiments

While building an agent, you may need to:

- Compare quality, cost and speed across different models (GPT-5, Claude 4 Sonnet, Gemini 2.0 Flash, etc.)

- Test multiple prompt variations to find which produces the most accurate, useful, or appropriately-toned outputs

- Optimize for specific metrics like cost, speed, and accuracy

Experiments let you systematically compare these parameters across one or more test inputs (the starting data you give your agent to process) to find the optimal setup for your use case.

Creating Experiments

To create an experiment:

Configure MCP

Make sure you have the AnotherAI MCP configured and enabled. You can view the setup steps here.

Create Experiment

Then just ask your preferred AI assistant to set up experiments for you. We'll cover some common examples and sample messages you can use below.

The most common parameters to experiment with are prompts and models, but you can also experiment with other parameters, such as temperature.

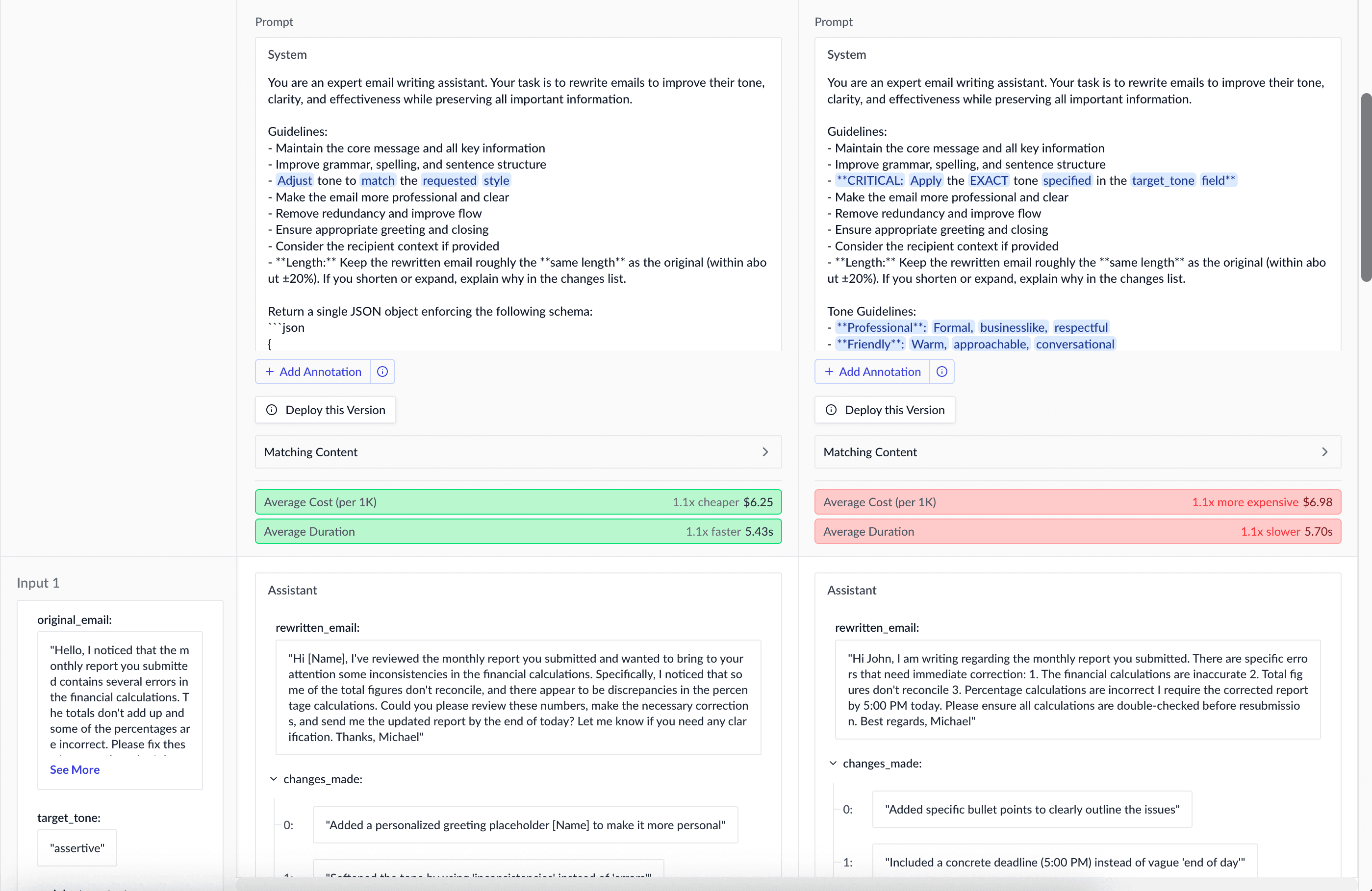

Prompts

Comparing different prompts is one of the most effective ways to improve your agent's performance. Small changes in wording, structure, or examples can lead to significant improvements. If you notice an issue with an existing prompt, you can even ask your AI assistant to generate prompt variations to use in the experiment.

Example:

Look at the prompt of anotherai/agent/email-rewriter and create an experiment in AnotherAI that compares the current prompt with a new prompt that better emphasizes adopting the tone listed in the input

Your AI assistant will create the experiment and give you an initial analysis of the results, as well as a URL for viewing the results in the AnotherAI web app, where you can analyze them manually.

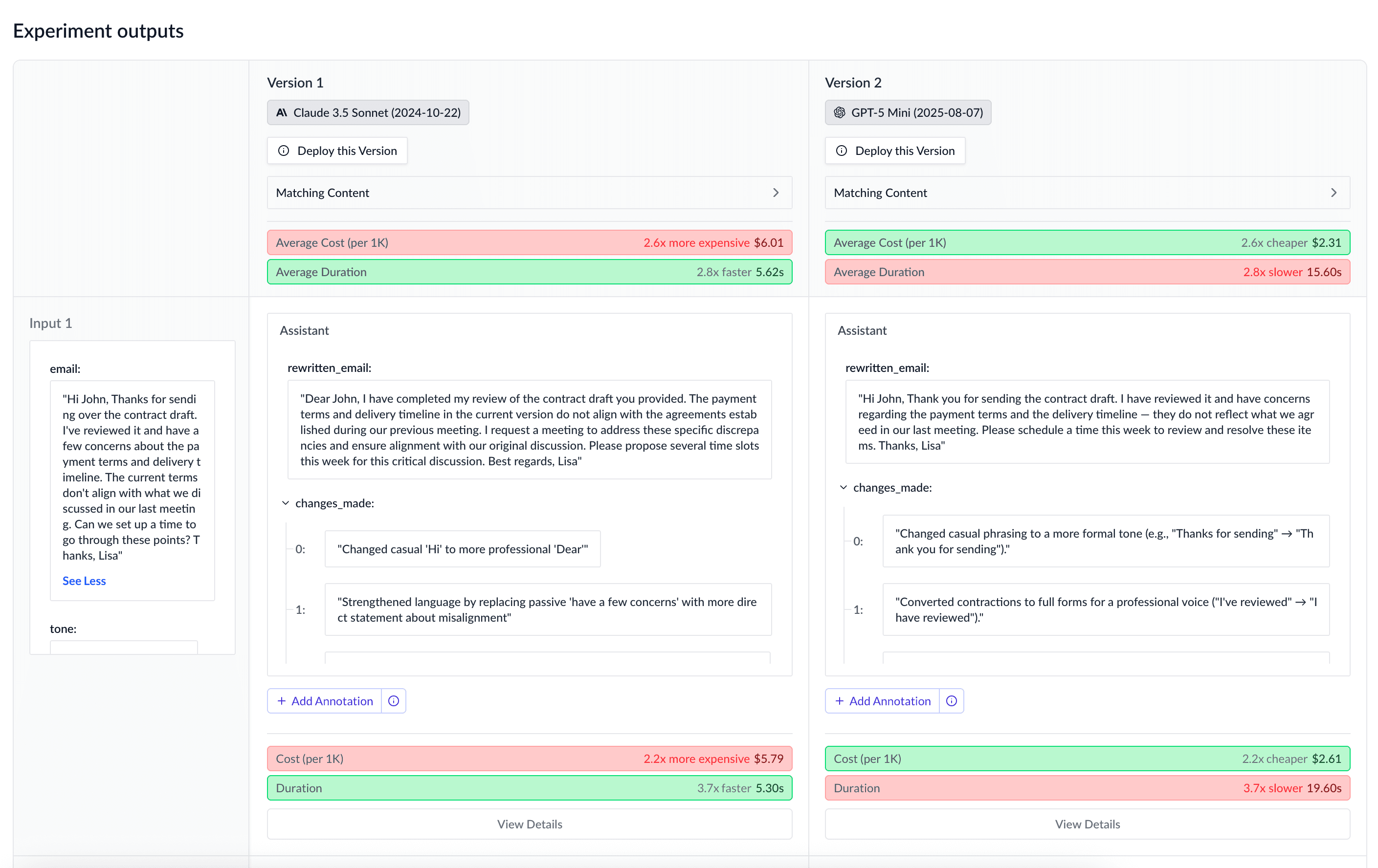

Models

Different models excel at different tasks. AnotherAI supports over 100 different models, and experiments can help you choose the right model for your agent, depending on its needs.

Example:

Create an AnotherAI experiment to help me find a faster model for anotherai/agent/email-rewriter that still maintains the same tone and verbosity considerations as my current model.

If you have a specific model in mind that you want to try (for example, a newly released model), you can ask your AI assistant to help you test that model against your existing agent version. You can always ask your AI assistant to use inputs from existing completions, so that you're testing with real production data.

Example:

Can you retry the last 5 completions of anotherai/agent/email-rewriter and compare the outputs with GPT 5 mini?

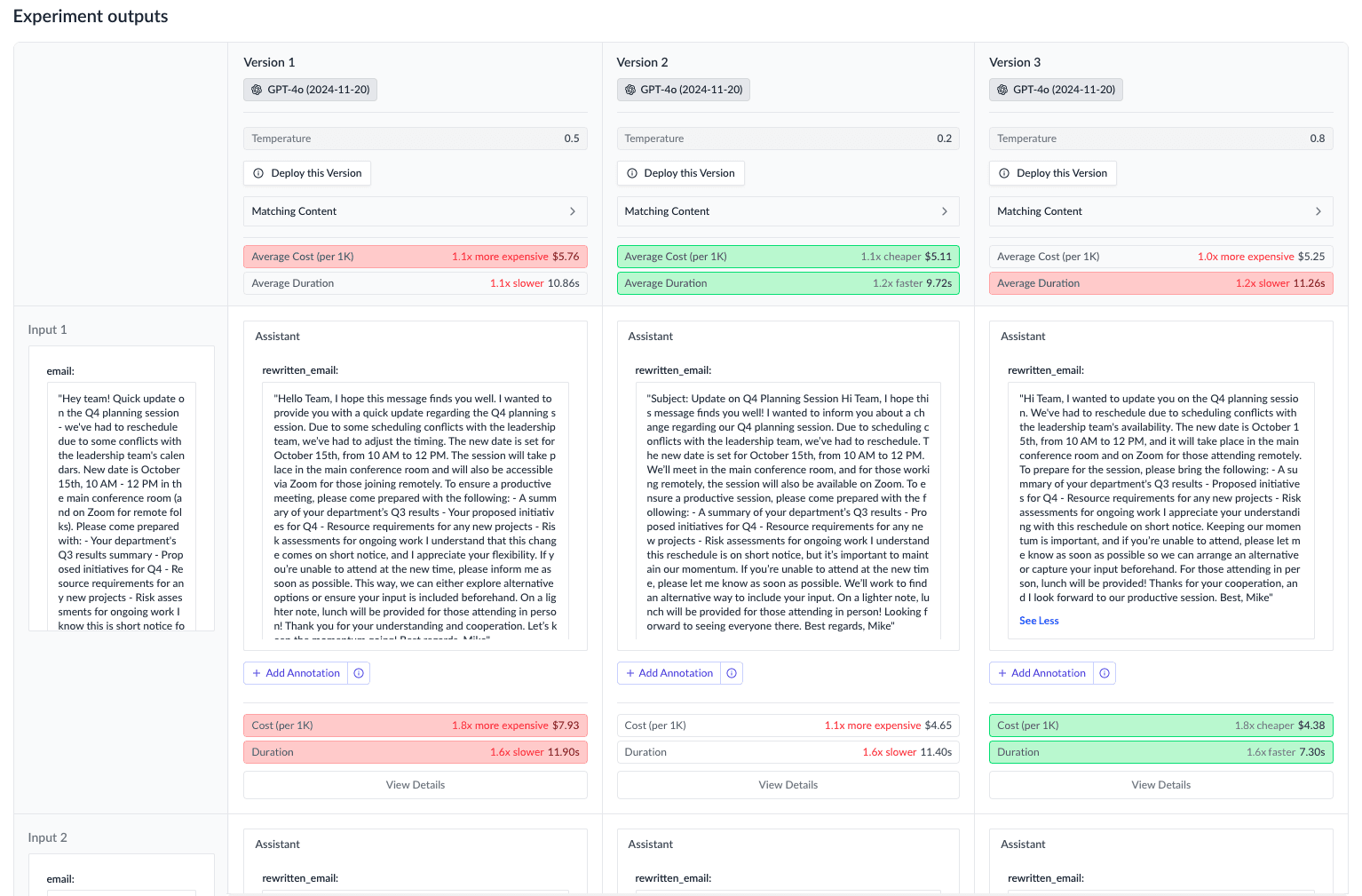

Other Parameters

Beyond prompts and models, adjusting other parameters can change your agent's behavior and output quality. Temperature in particular can have a significant impact on the quality of the output.

Example:

Test my email-rewriter agent with temperatures 0.2, 0.5, and 0.8 to find the right balance between creativity and professionalism

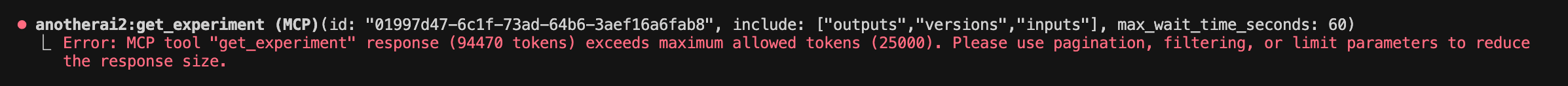

Managing Large Experiments with Claude Code

If you're testing an agent that has a large system prompt and/or very long inputs, you may encounter token limit issues with the get_experiment MCP tool that impact Claude Code's ability to provide accurate insights on your agent.

In this case, you can manually increase Claude Code's limit on MCP tool output tokens by setting the MAX_MCP_OUTPUT_TOKENS environment variable.

To set up permanently for all terminal sessions:

For zsh (default on macOS):

echo 'export MAX_MCP_OUTPUT_TOKENS=150000' >> ~/.zshrc && source ~/.zshrc

For bash:

echo 'export MAX_MCP_OUTPUT_TOKENS=150000' >> ~/.bashrc && source ~/.bashrc

For temporary use in current session only:

export MAX_MCP_OUTPUT_TOKENS=150000

Notes:

- If you forget or don't realize you need to set a higher limit, you can quit your existing session, run the command to increase the limit, and then use claude --resume to continue your previous session with the increased limit applied.

You can learn more about tool output limits for Claude Code in their documentation.
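
One caveat with the permanent setup commands: each run of echo ... >> appends another copy of the export line to the rc file. The following is a minimal sketch of a helper that appends the line only when it is not already present. The function name add_export_once and its two arguments (rc file path, token limit) are illustrative assumptions, not part of AnotherAI or Claude Code.

```shell
# Sketch: add the export line to an rc file only if that exact line is missing.
# add_export_once is a hypothetical helper name used for illustration.
#   $1 = path to the rc file (for example ~/.zshrc or ~/.bashrc)
#   $2 = token limit to set (for example 150000)
add_export_once() {
  rc_file="$1"
  line="export MAX_MCP_OUTPUT_TOKENS=$2"
  # grep -q: no output, -x: match the whole line, -F: fixed string (no regex)
  if ! grep -qxF "$line" "$rc_file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$rc_file"
  fi
}

# Example call (commented out so that pasting this block changes nothing):
# add_export_once "$HOME/.zshrc" 150000
```

After sourcing the rc file or opening a new terminal, echo "${MAX_MCP_OUTPUT_TOKENS:-not set}" shows whether the variable is visible in the current session. If the file already contains an export with a different value, this sketch appends a second line, and the later line takes effect when the file is sourced.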

Tips:

- When creating experiments from your codebase, always reference the specific files of your agent when requesting experiments to avoid any ambiguity about what should be tested

- When not in your codebase (for example, when using ChatGPT), you can reference the agent by the agent_id found in AnotherAI (e.g., anotherai/agent/email-rewriter) to avoid any ambiguity about what should be tested

- Change one variable at a time (e.g., models or prompts) so that you can attribute any change in the agent's performance to that variable.
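
To make the last tip concrete, here is a small sketch of what a one-variable experiment looks like when written out: the model stays fixed and only the temperature changes, so any difference between versions can be attributed to temperature. The baseline model name model-a and the function temperature_sweep are placeholders for illustration. In practice your AI assistant creates the versions for you.

```shell
# Hypothetical baseline; "model-a" is a placeholder, not a real model name.
base_model="model-a"

# Print one experiment version per temperature, holding the model fixed.
temperature_sweep() {
  for t in "$@"; do
    printf 'model=%s temperature=%s\n' "$base_model" "$t"
  done
}

# Example call (commented out so the block only defines the helper):
# temperature_sweep 0.2 0.5 0.8
```

Calling temperature_sweep with three temperatures lists three versions that differ only in temperature. Changing the model in the same experiment would multiply the number of versions and make it harder to tell which change caused a difference in the results.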

Analyzing Experiment Results

Once your experiment has been created, you can:

- Review your AI assistant's analysis of the results (and ask follow-up questions if needed)

- Review side-by-side comparisons in the AnotherAI experiments view

- Use annotations to mark which outputs are better and why (keep reading to learn more about annotations!)

Other Ways to Improve your Agents

Migrating an Existing Agent

Learn how to migrate agents currently using the OpenAI SDK, WorkflowAI, or other LLM SDKs to AnotherAI, and add features like input variables and structured outputs

Using Annotations to Improve Agents

Learn how to use annotations to provide specific feedback on completions and experiments, enabling your AI coding agent to improve your agents based on real-world performance