Using End-User Feedback to Improve Agents

Collect and integrate end-user feedback to improve your agents

End-User Feedback

Incorporating end-user feedback into your agent's development process can be invaluable for building effective agents. We recommend collecting feedback in your product and attaching it directly to the relevant completions as annotations.

Below is an example of the process you might implement for collecting and incorporating end-user feedback:

Set up user feedback collection in your product

This will look different for each product. In general, we recommend letting users leave a free-text comment so they can give nuanced feedback. You may also want to let users leave a score, for example a 1-5 star rating or a thumbs up/down. In that case, the score is added as a metric in the annotation.
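If your UI offers both a comment box and a score, a small normalization step keeps the resulting annotations consistent. The Python sketch below is illustrative only: the `UserFeedback` class, the `to_annotation` helper, the metric names `user_rating` and `user_thumbs_up`, and the `target`/`text`/`metric` field names are all hypothetical, not the documented AnotherAI annotation schema. It keeps a 1-5 star rating as-is and turns a thumbs up/down into 1 or 0.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UserFeedback:
    """Raw feedback captured by a hypothetical feedback widget."""
    completion_id: str
    comment: Optional[str] = None
    stars: Optional[int] = None       # 1-5 star rating, if the UI offers one
    thumbs_up: Optional[bool] = None  # thumbs up/down, if the UI offers one


def to_annotation(fb: UserFeedback) -> dict:
    """Map widget feedback to an annotation-like dict.

    Assumed field names (target, text, metric): check the real
    AnotherAI annotation schema before relying on them.
    """
    annotation: dict = {"target": {"completion_id": fb.completion_id}}
    if fb.comment and fb.comment.strip():
        # The free-text comment carries the nuanced part of the feedback.
        annotation["text"] = fb.comment.strip()
    if fb.stars is not None:
        if not 1 <= fb.stars <= 5:
            raise ValueError("stars must be between 1 and 5")
        annotation["metric"] = {"name": "user_rating", "value": fb.stars}
    elif fb.thumbs_up is not None:
        # Thumbs up/down becomes 1/0 so it can be averaged like any metric.
        annotation["metric"] = {"name": "user_thumbs_up", "value": int(fb.thumbs_up)}
    if "text" not in annotation and "metric" not in annotation:
        raise ValueError("feedback needs a comment or a score")
    return annotation
```

Storing binary feedback as a 0/1 metric is a design choice: it lets you compute an approval rate with the same aggregation you would use for star ratings.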

Example of a user feedback component:

Send user feedback to AnotherAI via the annotations endpoint
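Sending feedback comes down to one authenticated HTTP POST per piece of feedback, keyed by the completion ID from the AnotherAI response. The following is a minimal sketch using only the Python standard library. The URL, the bearer-token header, and the payload fields (`annotations`, `target`, `text`, `metric`, `author_name`) are assumptions for illustration, as are the helper names `build_feedback_request` and `send_feedback`; check the AnotherAI API reference for the actual endpoint and schema.

```python
import json
import urllib.request

# Hypothetical endpoint: confirm the real base URL, path, and auth scheme
# in the AnotherAI API reference before using this.
ANOTHERAI_ANNOTATIONS_URL = "https://api.anotherai.dev/v1/annotations"


def build_feedback_request(api_key: str, completion_id: str, rating: int,
                           comment: str, user_id: str) -> urllib.request.Request:
    """Build (but do not send) a POST request carrying one piece of user feedback."""
    if not 1 <= rating <= 5:
        raise ValueError("rating must be between 1 and 5")
    # Assumed payload shape: one annotation targeting the completion,
    # with the comment as text, the rating as a metric, and the user as author.
    payload = {
        "annotations": [{
            "target": {"completion_id": completion_id},
            "text": comment,
            "metric": {"name": "user_rating", "value": rating},
            "author_name": user_id,
        }]
    }
    return urllib.request.Request(
        ANOTHERAI_ANNOTATIONS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send_feedback(api_key: str, completion_id: str, rating: int,
                  comment: str, user_id: str, timeout: float = 10.0) -> int:
    """Send the feedback and return the HTTP status code."""
    req = build_feedback_request(api_key, completion_id, rating, comment, user_id)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.status
```

Keeping `build_feedback_request` separate from `send_feedback` makes the payload easy to inspect or unit-test without network access.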

Ask your AI coding assistant to help integrate user feedback with AnotherAI:

Create a function that sends user feedback to AnotherAI via the annotations API.

The function should accept:

- the completion_id from the AnotherAI response
- a numeric rating (e.g., 1-5 stars)
- a text comment from the user
- a user identifier for tracking who submitted the feedback

Use the AnotherAI annotations endpoint to store this feedback so it appears

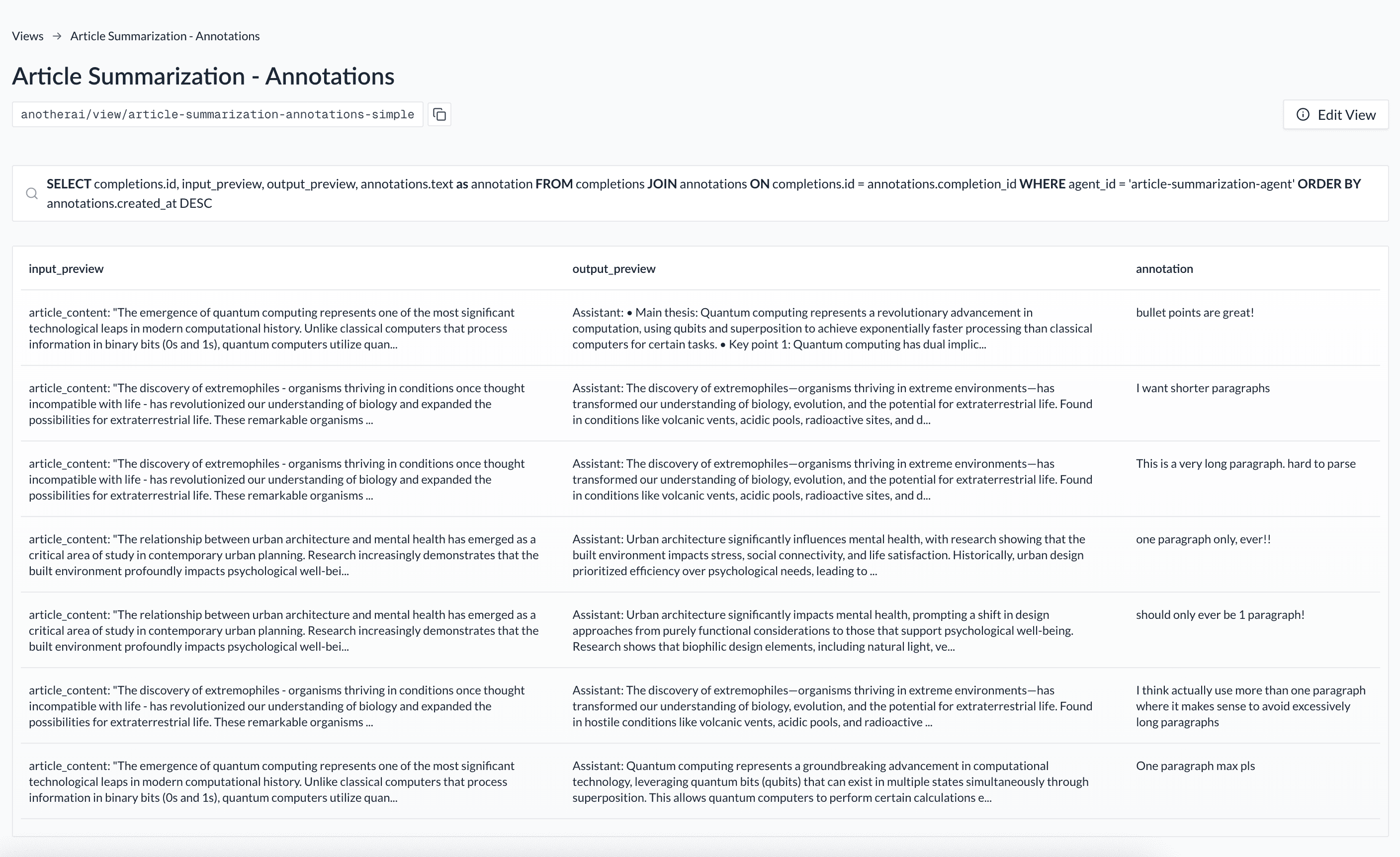

alongside the completion in the AnotherAI dashboard.Create a custom view to easily review the feedback sent

Ask your AI assistant to create a view in AnotherAI that shows all the feedback in one place.

Create a view that shows all completions of anotherai/agent/[your-agent-name] that have annotations.

The view should display the completion ID, input, output, and the annotations left on each completion.
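Conceptually, this view joins the agent's completions with their annotations on the completion ID and keeps only completions that received feedback. The sketch below reproduces that logic over in-memory records to make the shape of each row concrete. The record fields (`id`, `agent_id`, `input`, `output`, `completion_id`) and the `feedback_view` helper are hypothetical; the real view is built and queried inside AnotherAI.

```python
from collections import defaultdict
from typing import Dict, List


def feedback_view(completions: List[dict], annotations: List[dict],
                  agent_id: str) -> List[dict]:
    """Return one row per annotated completion of `agent_id`."""
    # Group annotations by the completion they target.
    by_completion: Dict[str, List[dict]] = defaultdict(list)
    for ann in annotations:
        by_completion[ann["completion_id"]].append(ann)

    rows = []
    for c in completions:
        # Skip other agents and completions that received no feedback.
        # (A membership test on a defaultdict does not create new keys.)
        if c["agent_id"] != agent_id or c["id"] not in by_completion:
            continue
        rows.append({
            "completion_id": c["id"],
            "input": c["input"],
            "output": c["output"],
            "annotations": by_completion[c["id"]],
        })
    return rows
```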

Using Feedback for Improvements

Ask your AI assistant to analyze user feedback and suggest improvements:

Review the user feedback annotations for agent/email-rewriter from the last week

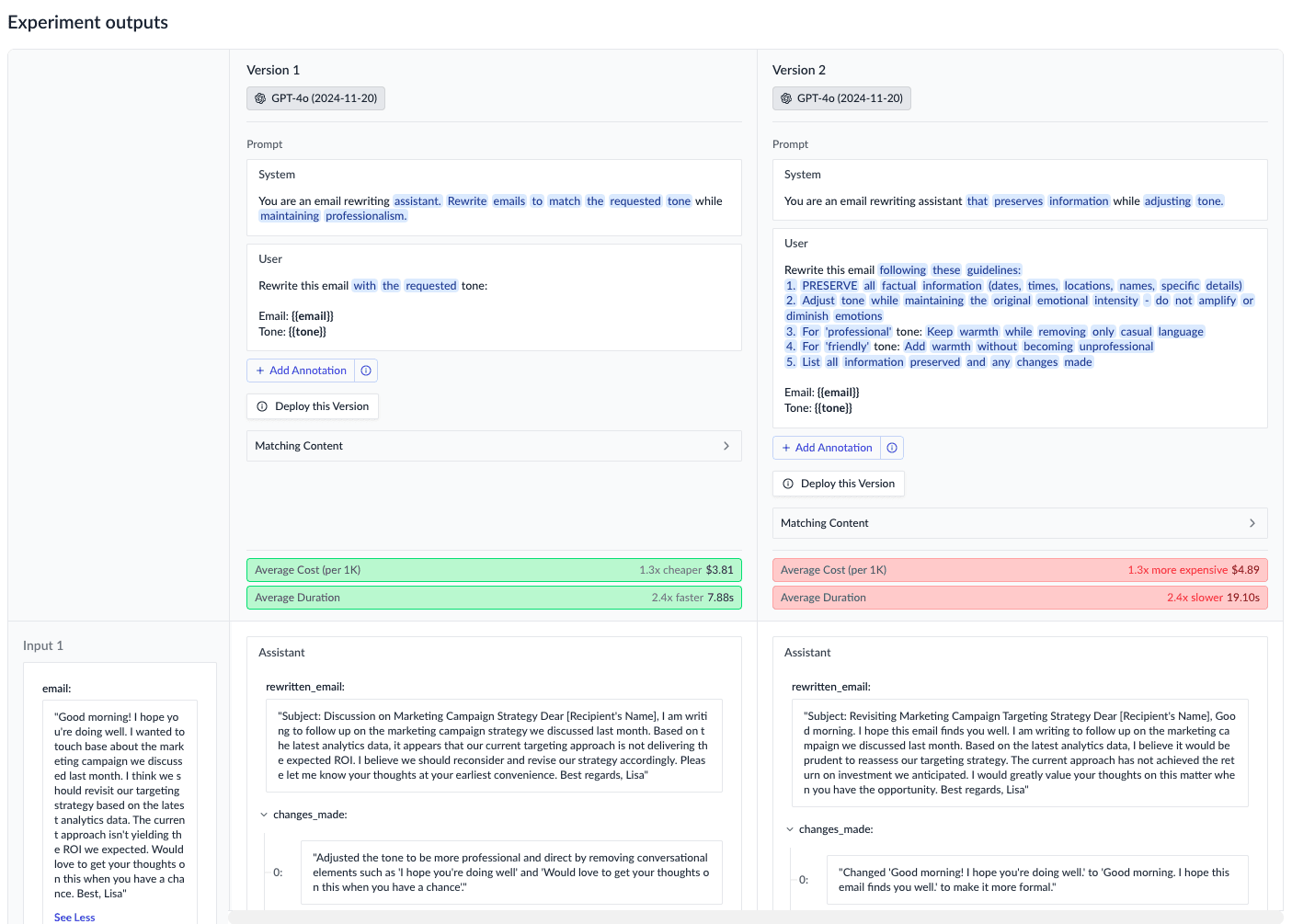

and suggest prompt improvements based on common complaintsYour AI assistant will query the annotations, identify patterns, and propose specific changes to improve user satisfaction.
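If you want to spot-check the patterns your assistant reports, a simple aggregation over exported annotations goes a long way. The sketch below assumes each annotation is a dict with hypothetical `created_at` (timezone-aware datetime), `rating`, and `text` keys. Its keyword counting over low-rated comments is a deliberately simple stand-in for pattern detection, not the method AnotherAI or your assistant uses.

```python
import re
from collections import Counter
from datetime import datetime, timedelta, timezone
from statistics import mean
from typing import List, Optional

# Small illustrative stopword list; a real analysis would use a fuller one.
STOPWORDS = {"a", "an", "and", "the", "is", "it", "of", "to", "too", "was", "this"}


def summarize_feedback(annotations: List[dict], now: Optional[datetime] = None,
                       days: int = 7, low_threshold: int = 2, top_n: int = 5) -> dict:
    """Summarize the last `days` of feedback: count, mean rating, common complaint terms."""
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=days)
    recent = [a for a in annotations if a["created_at"] >= cutoff]

    ratings = [a["rating"] for a in recent if a.get("rating") is not None]
    complaint_terms: Counter = Counter()
    for a in recent:
        rating = a.get("rating")
        # Only comments attached to low ratings count toward complaint terms.
        if rating is not None and rating <= low_threshold and a.get("text"):
            tokens = re.findall(r"[a-z']+", a["text"].lower())
            complaint_terms.update(t for t in tokens if t not in STOPWORDS)

    return {
        "count": len(recent),
        "average_rating": mean(ratings) if ratings else None,
        "top_complaint_terms": [t for t, _ in complaint_terms.most_common(top_n)],
    }
```

Comparing the top complaint terms against the assistant's proposed prompt changes is a quick way to confirm that its suggestions address what users actually wrote.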

Once you've validated improvements through experiments, you can deploy them instantly, without code changes, using deployments. This lets your team iterate quickly on agent improvements based on user feedback, without engineering bottlenecks or deployment delays.

Other Ways to Improve Your Agents
